By Ruth Beran

If an emergency responder is given information about a little girl on the fifth floor of a damaged building, but instead remembers the girl is on the sixth floor, the child may die if the building collapses.

“What we want to do is try and prevent that from happening,” says Prof Deak Helton from the University of Canterbury.

Visual displays, such as Google Glass or the Recon Jet, are one way to provide information during search and rescue, law enforcement or firefighting operations, but how will these new technologies affect performance?

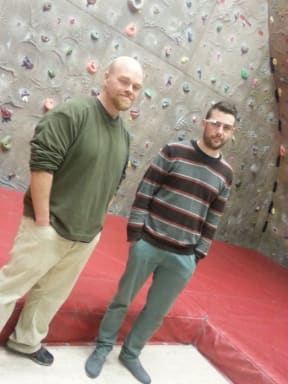

Deak Helton standing in front of the climbing wall, with Alex Woodham wearing Google Glass Photo: RNZ / Ruth Beran

Alex Woodham is building on previous research that used a climbing wall at the University's Recreation Centre to see how various conditions affect people traversing (moving back and forth across) the wall.

Participants are asked to either simply climb, or to climb while completing another cognitive task. In the past, this has been an auditory task.

“So we’ve had them listening to words, and then after the climb they recall as many as they can,” says Deak.

Previous research has shown that people lose about half of the information they are asked to retain when they’re physically active compared with being non-active.

“If I give them 20 words when they’re seated they’ll remember about 12, when they’re climbing they’ll remember about 6,” says Deak.

In this new research, Alex is displaying words to participants on Google Glass, a type of monocular, see-through display that sits just above the right eye.

Traversing is used because it “emulates some of the things that search and rescue people might have to do,” says Alex. “So high-angle climbing or just general complex physical activity.”

It is also a very controlled environment while still focusing on complex physical activity, similar to scrambling over uneven terrain, climbing in and out of buildings, or up and down ladders. “Things that firefighters or other emergency responders would have to do would be very similar,” says Deak.

In this environment, though, the researchers can measure very precisely how people physically perform, and they have more control over a setting that would otherwise be constantly shifting.

“It gives us that complexity and it allows us to examine how that works with cognitive load or cognitive functions and even how it affects things like memory,” says Deak.

One question Deak and Alex are trying to answer is how much information people can handle during intense physical activity.

Google Glass Photo: Tim Reckmann CC BY-SA 3.0

“It might be very limited, and if that’s the case it means we have to handle that,” says Deak. And understanding the operators' limitations means their capabilities can be enhanced. “For example we could augment them with updated memory systems, ways to recall the information once they’re in. Maybe they don’t need to remember it, maybe the system remembers for them and then gives them that information later.”

Previous research has shown that the content of information can affect climbers: fear-related words triggered a fear response, with participants bringing their arms and legs in towards the centre.

“So we’re going to see if we can see any difference in the motion they will have climbing during periods around when the words are displayed and compared to samples from other periods during the climb,” says Alex.

Participants will also wear a microphone and repeat aloud the words they see on the Google Glass.

“That will give us an idea of how much of the words they’re actually seeing and the timing of the words,” says Alex. “And we’ll be filming them as well, seeing how far they climb, how many holds they use, and then we’ll also be using that video for checking motion tracking to see how they move around.”

While it may seem obvious that climbing performance would suffer if participants had to read words at the same time, Deak says it’s always good to remind people that they can’t do these things, because many people believe they are immune. “When you talk to a lot of people in these environments they believe that [happens] for other people but not for themselves.”

He gives the example of texting while driving: many people do it themselves, even though they know it impairs other people's driving. Quantifying the impact of visual cues is critical, he says, so that their use can be regulated.

In the future, the paradigm Deak and his team have developed may also be used to compare new technologies, such as displayed map information, with a series of verbal commands. “We can actually compare which one is better for climbing. Not always are those things going to be easily distinguishable on raw common sense,” says Deak.