By James Gregory for the BBC

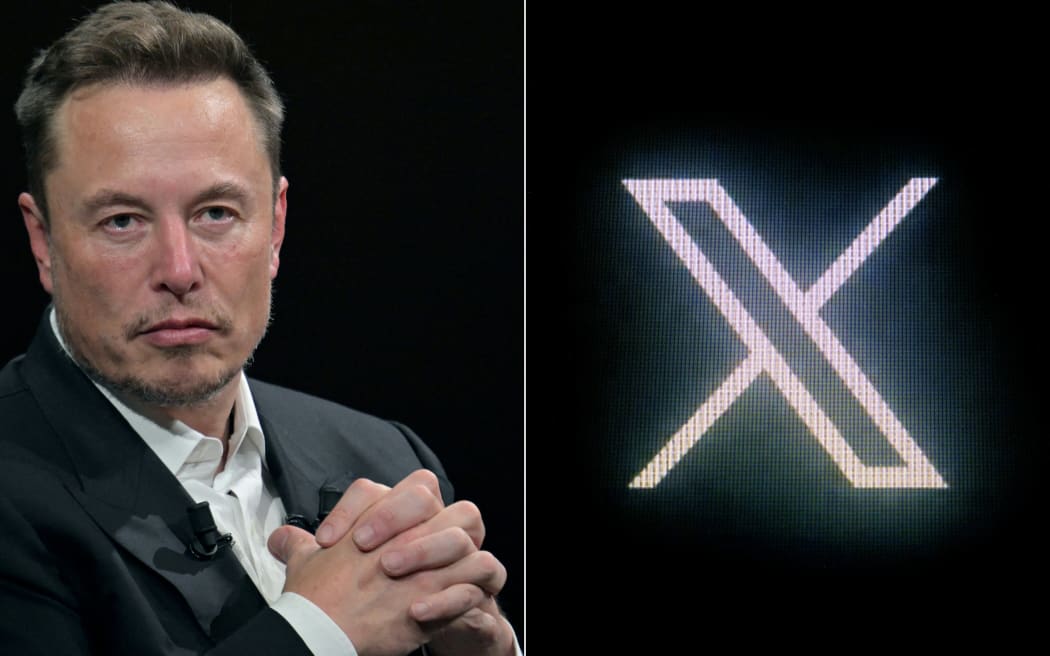

This combination of pictures created on 10 October, 2023, shows (L) SpaceX, Twitter and electric car maker Tesla CEO Elon Musk during his visit to the Vivatech technology startups and innovation fair at the Porte de Versailles exhibition centre in Paris on 16 June, 2023, and (R) the new Twitter logo, rebranded as X, pictured on a screen in Paris on 24 July, 2023. Photo: AFP / Alain Jocard

The EU is investigating Elon Musk's X over the possible spread of terrorist and violent content, and hate speech, after Hamas' attack on Israel.

The investigation, the first under the EU's new tech rules, will also look at the way complaints are handled.

X, formerly known as Twitter, said it had removed hundreds of Hamas-affiliated accounts from the platform.

TikTok and Meta have also been warned by the EU for not doing enough to tackle disinformation.

Social media firms have seen a surge in misinformation about the conflict between Israel and Hamas, including doctored images and mislabelled videos.

The EU's industry chief Thierry Breton confirmed on Thursday that the bloc had sent X a "formal request for information" to determine whether the platform was complying with the Digital Services Act (DSA), a recently enacted law designed to protect users of big tech platforms.

X CEO Linda Yaccarino said earlier on Thursday the platform had removed hundreds of Hamas-affiliated accounts and taken action to remove or label tens of thousands of pieces of content since Saturday's attack, in response to a letter from Breton on Tuesday.

At least 150 hostages were taken into Gaza and 1,300 people were killed during Hamas' deadly attacks in Israel at the weekend.

Hamas, a Palestinian militant group, is a proscribed terrorist organisation in the EU.

More than 1,500 people have been killed in Gaza since Israel launched retaliatory air strikes.

The UN's World Food Programme has called the situation in Gaza "dire", with food and water running out during an Israeli siege. Israel has said the blockade will not end until its hostages are freed.

In his letter to Musk, Breton said "violent and terrorist content" had not been taken down from X, despite warnings.

Breton did not give details on the disinformation he was referring to in the letter, but said instances of "fake and manipulated images and facts" were widely reported on the social media platform.

In his own response on X, Musk said: "Our policy is that everything is open and transparent, an approach that I know the EU supports.

"Please list the violations you allude to on X, so that the public can see them."

The DSA became law last November, but firms were given time to make sure their systems complied.

On 25 April, the commission named the very large online platforms (those with more than 45 million EU users) that would be subject to the toughest rules, among them X. Those rules came into effect four months later, in August.

Under the tougher rules, larger firms have to assess potential risks they may cause, report that assessment and put in place measures to deal with the problem.

Failure to comply with the DSA can result in EU fines of as much as 6 percent of a company's global turnover, or potentially suspension of the service.

X has until 18 October to provide details on how its crisis response protocol is activated and functions, and until 31 October on other issues.

Musk dissolved Twitter's Trust and Safety Council shortly after acquiring the company in 2022. Formed in 2016, the volunteer council contained about 100 independent groups who advised on issues such as self-harm, child abuse and hate speech.

Meanwhile, a Meta spokesperson told the BBC the company was "working around the clock to keep our platforms safe" and had established a "special operations centre" staffed with experts to monitor the situation.

- This story was first published by the BBC